AI AI AI AI AI

There's only one thing to talk about

Editor’s Note: I’ve been bad about writing over the last few months. I attribute this to my philosophy of only writing longform evergreen pieces. However, I have pressing things I’m thinking about, or things that aren’t worth an entire piece, so I’m going to stitch them together in more of a newsletter style.

If you’re tired of hearing about AI, skip this one. I’m committed to writing about more esoteric things in the future!

Today the Tech industry is the Oil Industry in a Hoodie

The way the tech industry works is approximately:

- Hire some dudes with laptops to do a creative writing exercise and create some code.

- The code makes someone’s computer do useful things for them and they pay you in attention or dollars.

- There’s no costs to distribute or store the code so you spend that money on a nice office and stock it with snacks and maybe some merch to hire more.

- Even after the nicest office in the Bay and company trips to Lake Tahoe, every dollar in revenue earns somewhere between 50 and 90 cents of profit.

- Find a way to get everyone on earth to use the thing.

Behold the mighty tech capex:

The shape of step one can change a bit depending on what you’re trying to do. You might recruit some of your burned out friends from Google, convince a VC to give you money to hire, or a VP to make you a director of a new team at a FAANG, but at the end of the day every tech company is about the same.

Except! For the newest batch of AI companies that might go something like:

- Get money, preferably a billion or more

- Hire some AI researchers and a body guard with a gun to ward off Mark Zuckerberg.

- Back the truck up to NVIDIA and dump money into their office until they decide you can have some GPUs.

- Train some models — or just sip matcha with your friends — and wait like 3-6 months to raise a new round.

- If that fails, fire the bodyguard, get acquihired.

There’s some app level companies too, AI for X/X but with AI. If I’m honest I don’t think they really matter in the long run. They don’t own their models. They’re just doing product development for someone else.

If you’re an entrant like OpenAI/Anthropic or a lab within a company like Meta, the plan is to build infra, datacenters, and then develop the model. There’s three tiers of players: Big Tech (Hyperscalers if you wanna sound cool), Neoclouds (former crypto farms willing to get loaded up with debt by NVIDIA), and the one chill one.

The spending on datacenters from all these players is where the rubber hits the road on AI today. There’s so much money flying around if you can capture a piece of it you’ll be very rich very quickly. If the demand grows as they expect your company will make insane amounts of money.

But now, we’re not talking about the tech playbook anymore! The initial investment isn’t guys with laptops, it’s buying a natural gas plant. This has caused real disruption as everyone tries to understand what the rules of the new game are.

This is only confusing if you were used to the golden economics of tech. These are the economics of heavy industry. The datacenters are AI factories and researchers do science which has uncertain payoffs.1 Everyone else is kinda useless for now.

The only difference between an AI datacenter and an Oil refinery is the expected value of the output. One will build God or automate all human labor or whatever instead of just replacing horses and making plastic.

The history of oil even mirrors the datacenter buildout, just much slower. Oil started out as a replacement for candles, with real worries that long-term growth would plateau, until the gasoline engine came along.

AI doesn’t have its gasoline engine so far.

The Janus Bubble

The current AI buildout is getting called an “industrial bubble”.

From what I can tell, an “industrial bubble” is when a new technology is so exciting that:

- The infrastructure to support it is overbuilt to the point where the industry collapses.

- Even though the investors lose the infrastructure remains, and demand eventually catches up.

The big ones are canals, oil, railroads, radio, and telecom. This is opposed to a “speculative bubble” like the Tulip bubble, or that fun French one, which is just a mania with no productive outcome.

In this framing, “industrial bubbles” aren’t actually that bad. They’re just part of a particularly exciting business cycle. Since the AI buildout involves putting things in the ground, it gets put into the “industrial” category and is considered “fine”.2

This is a false dichotomy, and even if you accept the framing, this buildout doesn’t fit into it.

The big issue with splitting different bubbles into these “types” is that every bubble has the same cause and effect. Speculation about something, then a capital black hole which collapses and causes widespread harm. The only difference is that in some cases the infrastructure happens to be useful years later.3

That’s survivorship bias not a fundamental difference.

I’m sure that if we were to ask the same crowd about government programs to build megaprojects that cause market distortions they’d have a lot of positive things to say, right?

Even granting that “industrial bubbles” exist, these datacenter investments just aren’t one. The core piece of infrastructure, about 50% of the cost, is GPUs, which go out of date or burn out every few years. Rail and fiber are build once, use basically forever. We were lighting up dotcom dark fiber into the 2010s.

On the demand side, compute demand is high and datacenters run at max unlike fiber or rail. This means everything is fine according to some analysts.

I don’t think that is a good enough justification. AI labs are incentivized to keep usage high.

For a lab, researcher time is in short supply while money for compute is more or less infinite if they can keep delivering on benchmarks.

For the researchers themselves, improving model performance means more funding, while improving efficiency causes another DeepSeek disruption. It’s why the chat apps are getting more expensive per query with reasoning. Would you rock the boat of your 250M pay package?

Even regular tech companies are begging their engineers to add AI features, and corporate America more broadly is trying to force employees to use AI at work. That’s driving up demand even if it’s useless.

Compute demand is the wrong metric to track for the long-term prospects of current gen AI because it’s more elastic than rail or telecom. The right measure is productivity gains. Anyone can create infinite compute demand by writing a five line program to produce random numbers in parallel.
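To make the point concrete, here is a minimal sketch of that kind of program. The function names and worker counts are made up for illustration. It uses threads to stay portable; swapping in `ProcessPoolExecutor` is what would actually pin every core in CPython.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def burn(n: int) -> int:
    """Generate n random numbers, throw them away, and report how many were made."""
    count = 0
    for _ in range(n):
        random.random()  # the "work": produces nothing anyone wants
        count += 1
    return count


def fake_demand(workers: int = 4, n_per_worker: int = 10_000) -> int:
    """Run burn() on several workers at once and return the total numbers generated."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(burn, [n_per_worker] * workers))


if __name__ == "__main__":
    # Raise either argument and "demand" grows without bound, with zero output of value.
    print(fake_demand(workers=8, n_per_worker=100_000))
```

Utilization dashboards would show this as real load, which is why utilization alone says little about whether the compute is producing anything.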

This buildout looks like a government megaproject if you squint a bit. A few people who only see high level abstractions pump money to where it’s easiest to understand.

It’s very easy to build a datacenter top down: map out the datacenter and track the build. It’s hard to build useful products that leverage it, because science experiments don’t “progress” like a construction project.

A Tale Of Two Economies

The US consumer is stressed. Personally and financially. Credit card, auto loan, student loan, and other consumer debt delinquencies are up sharply. Consumer sentiment is terrible. Walmart is courting upper income households who are feeling a squeeze while McDonald’s says people are skipping breakfast. Bank balances are low, rents are up, construction spending is down, housing starts are down, the housing market is frozen.

It seems that every successful non-AI related company is either a way to put groceries on layaway or a way to add gambling to sports/brokerages/trading cards. There’s a sense of nihilism, especially in young people, that’s unprecedented, even compared to the world wars.4

Aggregate economic metrics still look, like, fine? Unemployment is holding strong, GDP growth is still expected, corporate earnings are good, there’s moderation in inflation, the stock market is hitting all time highs consistently. Even rate cuts are on the way! Roll the memes.

What gives? You already know it’s the AI narrative.

Jury is still out on if the narrative is true. Maybe it’ll have a significant impact, but right now it’s creating an economy where:

- Big tech announces it’s buying something AI related.

- Wall St shovels money into it.

- Households that own big tech stocks spend because of perceived gains.

- Everyone else shovels money into gambling to try to beat the rat race inflation has created.

Since big tech is like 40% of the S&P and a majority of the gains over the last few years, that rounds up to anyone with a large portion of their wealth in stocks. Those top 10% of US households now make up about 50% of consumer spending and all net new spending in 2025.

There’s about 500B in GDP growth forecast in 2025. That’s about the same as Big Tech capex or the GDP of Norway. The full three year plan is about the GDP of Mexico or the entire COVID relief bill.

The US now has a Big Tech stimulus package. The AI buildout has Big Tech acting like a state owned oil company instead of tech companies. Massive amounts of money are being pushed into a small slice of the economy to build a factory whose product is labor automation, and the profits are getting distributed early to shareholders and broader society through stock gains.

The current market is valuing the upcoming automation as so obvious that we’re getting the reward now before any of the automation has actually happened.

Why does the market think this way?

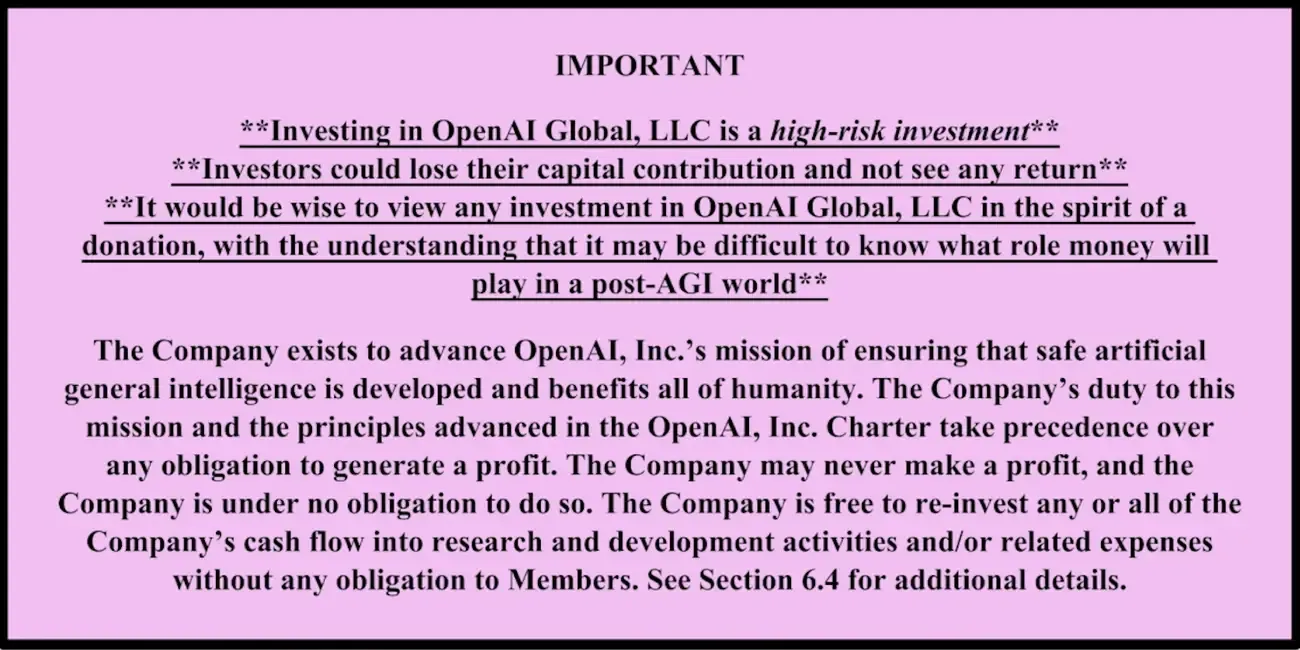

Well a few years ago VCs got the absolute perfect pitch.

There’s a company with a massive amount of talent, the fastest growing tech product of all time, and if we give them more money to buy NVIDIA chips and get data they say they’re going to build God. Maybe automate all human labor too. It may be difficult to know what role money will play in a post-AGI world after all.

All that company, sorry non-profit, needed was money. Lots of money. More money than every tech company in the history of tech.

Giving away money to fast growing companies trying to win some big market is the whole job. It’s even more perfect when they’re investing in a solution to a problem they understand, decoupling founders from their labor pool. No tech company is ever money constrained—they’re talent constrained—and this tech promised to solve that problem.

Fast forward to today, these goalposts have shifted just a bit.

The biggest canary in the coal mine is the switch to Reinforcement Learning over LLMs a few months ago. RL requires building a test environment to train against some goal. That’s not a “we just need more data + NVIDIA” thesis anymore. It’s way more difficult and speculative compared to the LLM one.

This looks like we’ve moved back to the pre-GPT world where AI research is more narrow and speculative. Any architecture generalizing to AGI soon looks more improbable the longer this goes on. Even naming a single use case that’s not ChatGPT is hard right now, and we’ve been working on this problem for years.

Hard takeoff theory is out of vogue in the tech media that’s too boring for the money guys to watch. The AI scientific community outside of OpenAI has been doubting LLMs as the architecture to take us there for a long time.

So everyone in the industry is now doing this:

Satya Nadella, Sam Altman, Zuck, Daddy Jeff, every financial publication are all wondering who could have possibly done this. Who could’ve built datacenters the size of Manhattan in order to win this race?

This always was a moon shot pitch. There now needs to be a multi trillion dollar pot of gold at the end of the rainbow.

For scale, we need to add a whole GOOGL and a whole MSFT to the economy. Even if OpenAI got all of Google’s ad revenue traffic tomorrow that’s only $284B a year. Not enough.

A lot of what’s been created under the AI umbrella is useful, and if optimized maybe profitable, but it’s not enough to just have that. The promise is automation of entire industries basically overnight.

That’s just the economic angle, a lot of externalities for this buildout are folded under the “when AGI comes it’ll fix this for us” bucket.

When will we get the ROI? The answer so far is a solid, “Eh! Check back in a year”.

Okay. Clock’s ticking. If anyone decides to pull their AI spend things will go quite poorly for the entire US. Every other sector isn’t really growing right now.

Don’t try to be Michael Burry from the Big Short

Should you try to short this?

Big tech spending is what’s driving this, and as long as the CEOs wanna spend they’ll spend. They’re willing to spend at least through 2026. Nothing’s stopping them from doing it or not. So unless you’re Priscilla Chan, no, don’t bother. If you sleep next to Mark Zuckerberg that changes the math.

Plus, in a bubble you need to buy in to get returns on anything. There’s no demand for anything else! Bubbles suck up capital. The Dotcom bubble contributed to manufacturing loss in the USA during the first China shock. No one wanted to invest in manufacturing compared to the internet.

Trying to time the pop is the worst way to invest. You’ll put on your short and likely lose money while watching everyone else party. We’re all long things getting better over time, going short makes you want bad things to happen. Shorting companies requires either having a catalyst or creating one through research. The reason the Big Short worked was the catalyst of bad home loans came with a timer. There isn’t one here.

That’s before even considering the state of the US administration. The president has a soft spot for the tech industry, maybe the gold bars seemed to help. Tariffs, probably Trump’s favorite thing, currently exempt computer parts. You think he won’t bail out everyone who invested in this stuff? They’re bailing out, like, Argentina.

If this cycle and ‘21 have taught me anything it’s that everyone will always know when a bubble is happening and invest anyway.

I’ll leave you with a portion of Stan Druckenmiller’s “Biggest Mistake” about trading the ‘00-‘01 tech bubble. The whole thing is worth a read.

So like around March I could feel it coming. I just — I had to play. I couldn’t help myself. And three times the same week I pick up a — don’t do it. Don’t do it. Anyway, I pick up the phone finally.

I think I missed the top by an hour. I bought $6 billion worth of tech stocks, and in six weeks I had left Soros and I had lost $3 billion in that one play.

You asked me what I learned. I didn’t learn anything. I already knew that I wasn’t supposed to do that. I was just an emotional basket case and couldn’t help myself.

So, maybe I learned not to do it again, but I already knew that.

The right way to make money from bubbles is to spot one forming, pour money in as quickly as possible and take it out over time. Druckenmiller learned from his mistake.

Sloptok

The business case is pretty good for creating Sloptok.

YouTube/TikTok/Reels et al act as brokers which connect the content creator supply side to consumer demand. This is such a profitable business that it pays for hosting every video anyone creates, forever, plus some very well paid engineering teams.

It could be better though! Content creators are a pain in the ass. They’re always complaining about their revenue share, their content pisses off advertisers, and giving random people fame with no agents is usually a recipe for a PR disaster.

From the view of the brokers, the core function of creators is to put the right colored pixels and sounds together at the right time. Imagine the possibility space of every combination of pixel and sound as a pile. Creators dig through those combinations and choose some to upload as content. This is usually path dependent. Each video will use similar design language to the previous one that worked for the platform and their audience.

So you can imagine them as excavators through this pile, with the recommendation algorithm functioning as a judge of quality of what they’ve excavated. The problem space is so vast that it’s impossible to automate them.

As a first approximation, if you were to try to automate them in a pre-GenAI world you could:

- Generate every single combination of pixels on a screen, Library of Babel style.

- Try to sort them into something coherent.

- Serve that in pseudo real time based on user/advertiser preferences.

That was a way harder engineering problem than just throwing a bunch of money at datacenter storage, hiring some good lawyers for issues and calling it a day.
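For a rough sense of how big that pile is, here is a back-of-the-envelope sketch. The frame size and color depth are my own assumptions (one 1080x1920 vertical frame at 24-bit color), and it only counts a single silent frame, not a whole video.

```python
import math


def digits_in_frame_count(width: int, height: int, bits_per_pixel: int = 24) -> int:
    """Number of decimal digits in the count of distinct frames of this size.

    The count itself is 2 ** (bits_per_pixel * width * height), which is far too
    large to print, so we work with its base-10 logarithm instead.
    """
    total_bits = bits_per_pixel * width * height
    return math.floor(total_bits * math.log10(2)) + 1


if __name__ == "__main__":
    # Assumed frame: one vertical 1080x1920 image, 24-bit color.
    n = digits_in_frame_count(1080, 1920)
    print(f"The number of possible frames has about {n:,} digits")
```

The count is not a number with millions in it; it is a number millions of digits long. Enumerate-and-sort was never going to be on the table.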

Now that the multi-year, multi-billion investment in research and infra is done, the path seems pretty straightforward to me, since these platforms already have an algorithm which picks 30 second videos for their users.

To a first approximation:

- Generate videos based on what someone already watches.

- Use the recommendation algorithm to pick the best ones.

- Repeat until you have an acceptable set of content for each user.
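The three steps above can be sketched as a loop. This is a toy under heavy assumptions: `build_feed`, `toy_generate`, and `toy_score` are hypothetical stand-ins for a video model and a recommendation algorithm, and "videos" here are just strings.

```python
import itertools
import random
from typing import Callable, List


def build_feed(
    history: List[str],
    generate: Callable[[List[str], int], List[str]],
    score: Callable[[str], float],
    feed_size: int = 3,
    threshold: float = 0.5,
    batch: int = 10,
    max_rounds: int = 20,
) -> List[str]:
    """Generate candidates from watch history, keep the best-scoring ones, repeat."""
    feed: List[str] = []
    for _ in range(max_rounds):
        candidates = generate(history, batch)  # step 1: generate from history
        for video in sorted(candidates, key=score, reverse=True):  # step 2: rank
            if score(video) < threshold:
                break  # sorted descending, so everything after this is worse
            if video not in feed:
                feed.append(video)
            if len(feed) >= feed_size:
                return feed  # step 3: stop once the set is acceptable
    return feed  # may be short if the generator never clears the bar


# Hypothetical stand-ins so the sketch runs end to end.
_rng = random.Random(0)
_ids = itertools.count()


def toy_generate(history: List[str], n: int) -> List[str]:
    """Pretend video model: remix a topic the user already watches."""
    return [f"{_rng.choice(history)}-variant-{next(_ids)}" for _ in range(n)]


def toy_score(video: str) -> float:
    """Pretend recommender: a deterministic score in [0, 1)."""
    return (sum(map(ord, video)) % 100) / 100


if __name__ == "__main__":
    print(build_feed(["cooking", "cats"], toy_generate, toy_score))
```

The `max_rounds` cap is the interesting knob in practice: every extra round is more GPU time spent per user.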

There’s a lot of technical challenges I’m glossing over, but take my word for it as an engineer that doing this would probably just require staffing up. The hard part was building the model.

Throwing Creators out a window is a big win by cost cutting, but in the long run algorithmically generated videos are probably better for engagement than recommended ones because:

- Generated video can target individuals instead of whole audiences.

- No physical limitations. If an advertiser wants to change the Pepsi can to a Coke one in a viral video? Go ahead no problem. No more ad breaks in videos.

- Generated videos are tightly coupled with the recommendation algorithm. No more “tricks” by creators to engagement hack audiences.

The hardest part is owning the distribution: the value accrues to the platform with the tool. If it can’t capture attention you’re just a driver of someone else’s revenue. You’ll have to go to war with Meta/TikTok/YouTube in a big way. It’ll be hard but welcome to capitalism.

All that said, Sloptok is bad for society! Obviously! That’s not interesting or surprising. Dedicating billions of dollars and enough electricity to power a city to generate slop at a loss isn’t a social good. Likely falling into market failure territory along with short form video as a whole genre.

Software engineers viewing finance people with disdain because they don’t “make the world a better place” feels quaint now. At least they’re making stock prices better instead of concocting ways to steal millions of human lifetimes worth of time on short form video. Stocks at least have some positive ROI.5

If we end up getting stuck at the current state, tech hiring probably comes back in a big way. All of this new infra will be complicated.

Some people are wondering loudly if this is what a company that plans on automating white collar work in like 3-5 years would do. Maybe, but this is also what a company that hired a bunch of Meta PMs last year would do. Doesn’t change my AGI timeline much.

Private Companies Should Still Disclose Something

There’s a trend that private is the new public. Private Equity, private credit, and private companies are the big hotness in financial services/tech. Stripe is on series what now? G?

I don’t think this is a good thing. We shouldn’t put a bunch of functions in black boxes where friendly regulators can overlook things and then, when returns are bad, try to sell that black box onto retail as exit liquidity.

There could be some real risks hiding in the black box! Look at what happened with WeWork. The best info we have about private tech companies comes from leaks to The Information about “annualized” revenue which is maybe the single worst metric ever. Someone somewhere has an understanding of the finances for companies at this size right?

Even if it’s not the company itself, the investors should be doing some diligence? Hopefully?

If the only GDP growth in the country comes from funding your project, maybe you can publish a one pager with your balance sheet once a quarter. I don’t think there’s a real compliance burden there.

But considering who’s president, I don’t think that’s happening anytime soon.

- Chris

Footnotes

1. Training an ML model isn’t like creating a new tech product. Normally you’d ideate some features, implement them, and refine as you go. Model development is closer to regular ole science. Setup experiments, try them, and sometimes they go wrong. Industrial RnD, but it just happens to use code. ↩

2. “Boom” even makes the argument that bubbles like this are actually required to deploy world changing tech. It’s popular amongst VCs for obvious reasons. ↩

3. There’s an argument that power generation is what will actually persist. Even if chip demand subsides all the natural gas power plants will stay. We’ll see, but it’s almost certainly one of the worst ways to increase power generation grid wide. ↩

4. I know a lot of you live in places like SF where 100k is literally poverty, but we’re not talking about you! We’re talking about regular people in the rest of the country. Why should you care? Because if people are bored and broke they start doing more extreme things to remedy the situation. ↩

5. Stones. Glass houses. I know. ↩
